Are you building a significant Application Programming Interface (“API”) application on a Generative AI platform such as ChatGPT-4? Before you complete your project and release it to users, you should engage third-party experts to audit, assess and refine your model. They have techniques to efficiently adapt and fine-tune AI language models for specific domains, industries, and use cases. They can help ensure the application’s compliance with your company policies and industry standards. The right team can also audit and assess the application and, if necessary, fine-tune the AI again so that it complies with governing laws and regulations.

Introduction

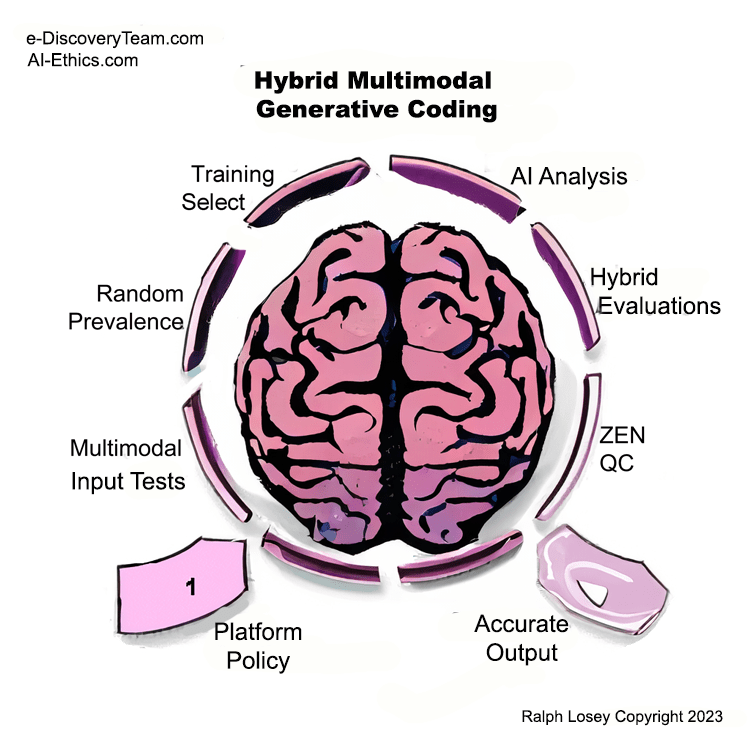

The workflow shown in the e-Discovery Team diagram above is designed by and for professionals trained in AI, Tech and the Law. It illustrates a proven method for assessment and model refinement (tuning) services that can help minimize the risks of a new application. This procedure can also help ensure that the AI-generated output is legally compliant and meets necessary safeguards, including cybersecurity.

Third-party quality control testing and ongoing monitoring are imperative in view of the inherent dangers and risks of all Generative Pre-trained Transformer (“GPT”)-based software. Here is a good scholarly introduction to Generative AI:

Generative AI refers to a class of artificial intelligence models that can create new data based on patterns and structures learned from existing data. . . . Generative AI models rely on deep learning techniques and neural networks to analyze, understand, and generate content that closely resembles human-generated outputs. Among these, ChatGPT, an AI model developed by OpenAI, has emerged as a powerful tool with a broad range of applications in various domains.

Partha Ray, ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope, (Internet of Things and Cyber-Physical Systems, Volume 3, 2023, Pages 121-154).

Reinforcement Learning from Human Feedback

As a recent non-technical article by OpenAI makes clear, GPT uses a hybrid, active machine training method that depends on human experts for training (https://lnkd.in/eaNTjStU). This process is called Reinforcement Learning from Human Feedback (“RLHF”). For full technical details, see OpenAI’s paper, Deep reinforcement learning from human preferences. Also see Illustrating Reinforcement Learning from Human Feedback (Hugging Face, 12/09/22).

The e-Discovery Team has substantial experience with the hybrid RLHF model of machine training and can help large organizations overcome the inherent challenges of significant applications, especially where training on new data and confidential, sensitive information are involved. These services can help tune and monitor your applications to reduce risks and maximize benefits.

Bottom line, ChatGPT-4 is a great leap forward in automation, but human experts are still in the loop to create a valid “Answer Key” for the GPT and any APIs. Human moderators, aka Subject Matter Experts (“SMEs”), are still needed to apply their expertise to the neural net, moderate the content generated, and ensure reliable responses to user prompts. Your model is only as good as the data it is trained on and the SME trainers who create the gold standard answers for your particular application and data.
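To make the SME “Answer Key” idea more concrete: in the preference-learning approach described in the RLHF paper cited above, humans compare pairs of responses, and a reward model is fit so that the response the human preferred scores higher. The sketch below illustrates only that reward-modeling step, under loud assumptions: a toy linear model, hypothetical features such as `cites_a_source` and `contains_hallucination`, and made-up comparisons. Production systems use a neural network as the reward model and then use reinforcement learning to optimize the language model against it, which is not shown here. All function names are hypothetical.

```python
import math


def preference_probability(reward_a, reward_b):
    """Bradley-Terry model: chance a human rater prefers response A over B."""
    return 1.0 / (1.0 + math.exp(reward_b - reward_a))


def reward(weights, features):
    """Toy linear reward model: a weighted sum of response features."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(comparisons, n_features, lr=0.1, epochs=200):
    """Fit reward weights from SME comparisons of (preferred, rejected) responses.

    Minimizes -log P(preferred beats rejected) by stochastic gradient descent.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            p = preference_probability(reward(weights, preferred), reward(weights, rejected))
            # Gradient step: raise the preferred response's reward and lower the
            # rejected one's, more strongly when the model currently disagrees.
            for k in range(n_features):
                weights[k] += lr * (1 - p) * (preferred[k] - rejected[k])
    return weights


if __name__ == "__main__":
    # Hypothetical features per response: [cites_a_source, contains_hallucination]
    comparisons = [
        ([1, 0], [0, 1]),  # SME preferred the cited, accurate answer
        ([1, 0], [1, 1]),
        ([0, 0], [0, 1]),
    ]
    w = train_reward_model(comparisons, n_features=2)
    print("learned weights:", [round(x, 2) for x in w])
    print("P(accurate beats hallucinated):",
          round(preference_probability(reward(w, [1, 0]), reward(w, [0, 1])), 3))
```

The point of the toy is the GIGO lesson that follows: the learned reward can only reflect the judgments the SMEs actually recorded, so mistaken or biased comparisons are learned just as faithfully as good ones.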

GIGO

With AI models like ChatGPT-4, the inherent computing risk of GIGO (“Garbage In, Garbage Out”) remains. Garbage answers can arise not only from incorrect SME knowledge and RLHF labeling, but also from hidden bias propagation, which is caused by unconscious SME bias and by bias in the data itself. See Partha Ray, ChatGPT: A comprehensive review (“The performance of ChatGPT can be influenced by the quality and diversity of the training data. Biased training data can lead to biased models, which can have negative consequences in areas such as healthcare, criminal justice, and employment.”)

Although errors and hallucinations in the output to users are sometimes obvious, other errors and insults can be subtle and hard to detect. So can data leakage and vulnerability to theft of confidential data through cyberattacks. That is where third-party audit and certification services are invaluable.

Large Project Management Issues

There are also significant management issues that can arise in large corporate projects involving Generative AI applications and confidential data. These challenges are usually caused by communication and work coordination barriers among SMEs, Business Units, IT, InfoSec, Legal and End Users. For example, everyone involved in the creation and implementation of AI programs should understand the impact of basic software parameter settings of the custom application, such as “Temperature” and “Top-P.” See Creativity and How Anyone Can Adjust ChatGPT’s Creativity Settings To Limit Its Mistakes and Hallucinations (e-Discovery Team, 7/12/23).
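For non-engineers on a project team, a small simulation can show what these two settings do. The sketch below assumes the general, textbook technique: temperature divides the model’s raw token scores before they are converted to probabilities, and Top-P (“nucleus”) filtering keeps only the most likely tokens whose combined probability reaches the Top-P value. It is a simplified illustration, not OpenAI’s internal implementation. The names `temperature` and `top_p` mirror OpenAI’s API parameters; the candidate words, scores, and helper function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores (logits) into probabilities, scaled by temperature.

    Temperature below 1 sharpens the distribution toward the top-scoring token;
    temperature above 1 flattens it, so less likely tokens are picked more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Nucleus (top-p) filtering: keep the smallest set of most likely tokens
    whose cumulative probability reaches top_p, drop the rest, renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    kept_total = sum(probs[i] for i in kept)
    return [probs[i] / kept_total if i in kept else 0.0 for i in range(len(probs))]


def sample_next_token(tokens, logits, temperature=1.0, top_p=1.0, rng=random):
    """Pick one token after applying temperature, then top-p filtering."""
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidate next words and scores, for illustration only.
    tokens = ["liable", "negligent", "responsible", "banana"]
    logits = [2.0, 1.5, 1.0, -1.0]
    for t in (0.2, 1.0, 1.5):
        probs = softmax_with_temperature(logits, t)
        print(f"temperature={t}:", [round(p, 3) for p in probs])
    print("top_p=0.5 keeps:", top_p_filter(softmax_with_temperature(logits, 1.0), 0.5))
    print("sampled:", sample_next_token(tokens, logits, temperature=0.2, top_p=0.9))
```

Running it shows the practical trade-off: low temperature and low Top-P concentrate the output on the most likely words, which tends to reduce off-the-wall answers at the cost of creativity. OpenAI’s API reference generally recommends adjusting one of the two settings rather than both.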

Training is required not only of the AI, through RLHF, to set up and tune the application, but also of the end users. They need good instruction and training to use these powerful new tools properly. A skilled independent legal tech team can assist with these issues throughout an API project.

AI-Enhanced Hybrid Multimodal Training Has a Comparatively Long History in Legal Tech

Generative AI training models employ many machine training methods that the e-Discovery Team, and a few others in the legal tech industry, have used in eDiscovery since 2006. That is when e-Discovery Team leader and chief blogger, Ralph Losey, helped his law firm obtain the first legal opinion that approved the use of active machine learning (aka “predictive coding”) in federal court. Much has been learned since that time. The accelerated power of neural nets now allows AI to work much faster, smarter and more accurately than ever before, but you must still pick your experts well. One thing everyone in this field has learned is that diversity of views and talent is important.

Due to the GIGO problem, your AI applications should include not only software model alignment procedures, but also random sampling and third-party reviews, including our red-team testing, to verify functionality and compliance. Even one hallucination, error or inadvertent disclosure of confidential information can be very damaging. That is why these safeguards are part of the e-Discovery Team processes.

The e-Discovery Team and a few others have experience with these and related AI issues from thousands of law firm projects. We are, however, the only legal group, aside from Professor Maura Grossman, to have conducted independent research and participated in (and won) several open competitions using our specialized hybrid multimodal machine training methods. Our new model for Generative AI closely follows these proven methods.

Conclusion

Services from a trained legal tech team can help you to implement your major AI application projects in at least four ways:

1. SME issues. Locate, hire, and supervise moderators and other AI trainers, including your internal experts, to assist in creating and refining your application.

2. Management issues. Assist in coordination and communication between business units, SMEs, Legal, IT, InfoSec and other tech experts and project managers.

3. Quality Control. Set up defensible general and project-specific QC procedures, including statistically sound random sampling, and both internal and independent monitoring. Use red-team cyber specialists for initial testing, and later for periodic monitoring, to probe for vulnerabilities and potentially dangerous and embarrassing AI responses. We are especially focused on preventing leaks of confidential information.

4. Regulatory Compliance. Establish, implement, refine and monitor an AI audit and compliance program, including compliance with internal corporate policies, and with industry and government regulations. The e-Discovery Team has many lawyers who love policy issues, including Ralph Losey, who has extensive hands-on experience with active machine learning in an adversarial setting. We can help keep your corporate policies and compliance up to date in this rapidly changing environment.
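As one illustration of what “statistically sound random sampling” of AI output can mean in practice, here is a minimal sketch. It assumes standard textbook choices that the article itself does not specify: Cochran’s sample-size formula with a finite population correction, 95% confidence, a ±5% margin of error, a worst-case assumed error rate of 50%, and the Wilson score interval for reporting the observed error rate. The population of logged responses, the seed, and the error count are hypothetical, and the function names are illustrative only. This is not presented as the e-Discovery Team’s own QC protocol.

```python
import math
import random
from statistics import NormalDist


def z_score(confidence):
    """Two-sided critical value, e.g. about 1.96 for 95% confidence."""
    return NormalDist().inv_cdf((1 + confidence) / 2)


def sample_size(population, confidence=0.95, margin=0.05, p=0.5):
    """Cochran's sample-size formula with a finite population correction.

    p=0.5 is the conservative (worst-case) assumption about the true error rate.
    """
    z = z_score(confidence)
    n0 = z * z * p * (1 - p) / (margin * margin)
    return math.ceil(n0 / (1 + (n0 - 1) / population))


def draw_qc_sample(item_ids, confidence=0.95, margin=0.05, seed=None):
    """Draw a simple random sample of AI outputs for SME review.

    Recording the seed makes the draw reproducible and easier to defend later.
    """
    n = sample_size(len(item_ids), confidence, margin)
    return random.Random(seed).sample(item_ids, n)


def wilson_interval(errors, reviewed, confidence=0.95):
    """Wilson score interval for the error rate observed in the reviewed sample.

    Unlike the simple normal approximation, it stays informative when zero
    errors are found.
    """
    z = z_score(confidence)
    rate = errors / reviewed
    denom = 1 + z * z / reviewed
    center = (rate + z * z / (2 * reviewed)) / denom
    half = (z / denom) * math.sqrt(rate * (1 - rate) / reviewed + z * z / (4 * reviewed ** 2))
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    # Hypothetical: 10,000 logged responses from a custom GPT application.
    responses = [f"resp-{i:05d}" for i in range(10_000)]
    to_review = draw_qc_sample(responses, seed=2023)
    print("review", len(to_review), "responses")
    # Suppose the SMEs flag 4 of them as errors (illustrative number only).
    low, high = wilson_interval(4, len(to_review))
    print(f"estimated error rate between {low:.2%} and {high:.2%}")
```

With these assumptions, 10,000 logged responses call for reviewing 370 of them. Note that a clean sample does not prove zero errors: finding none in 370 still leaves an upper bound of roughly 1% at 95% confidence, which is one reason periodic re-sampling and red-team probing remain necessary.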

Ralph Losey Copyright 2023 — All Rights Reserved