No Matter Who Is Right, We Need AI Ethics Work To Begin Now.

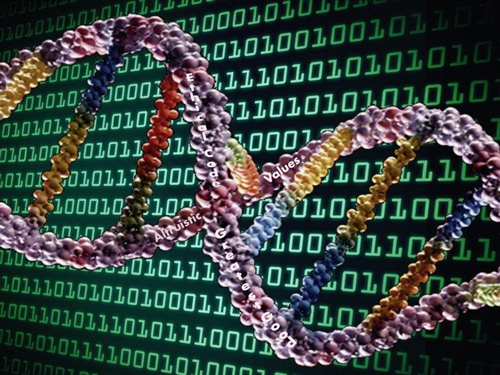

AI-Ethics.com is dedicated to working with interdisciplinary teams to reconcile the divergent views about AI regulation, identify the key challenges, and create effective solutions. The first draft of our identification of the key challenges is shown in the featured graphic at right. It is part of our study and analysis of the draft principles already created by other groups.

We need lawyers and AI experts to help us in this scholarly work and our other two basic missions: foster dialogue between the conflicting camps; and inspire and educate everyone on the importance of artificial intelligence. Please contact us to join our team. We are still in the early formative stages.

The dire warnings of the great geniuses may well be right, or maybe Kurzweil, Zuckerberg, Domingos, Etzioni and mainstream scientists are right. Either way, the specialized AIs we already have raise many serious issues that we have to deal with now. Think of military drones with autonomous weapons and smart missiles. Musgrave & Roberts, Humans, Not Robots, Are the Real Reason Artificial Intelligence Is Scary (The Atlantic, Aug. 14, 2015); Dom Galeon, Russia Is Building an AI-Powered Missile That Can Think for Itself (Futurism, 7/26/17). We do not want a military AI race between superpowers, but that seems to be where we are headed.

Also think of driverless cars in general and the trolley problems that they will eventually face. Think of how AI technology in social media can already amplify racist and sexist prejudices; how it could upend society by putting millions out of jobs; how it is set to increase inequality; and how it will be used as a tool of control by authoritarian governments. Elon Musk dismisses Mark Zuckerberg’s understanding of AI threat as ‘limited’ (The Verge, 7/25/17). Think of the poor depressed Chess and Go players. Christopher Moyer, How Google’s AlphaGo Beat a Go World Champion (The Atlantic, Mar 28, 2016).

All kidding aside, AI Ethics is not a game. Whether AI goes berserk or not, AI Ethics is one of the most important challenges faced by humanity. See AI and the Future of Life Institute. We must concentrate and hack out solid robotic ethics. There is far more to the ethics of artificial intelligence than Asimov’s Three Laws. Also see Machine Ethics (Wikipedia); Bostrom, Nick, and Eliezer Yudkowsky, “The Ethics of Artificial Intelligence.” (Cambridge University Press); Towards a Code of Ethics in Artificial Intelligence with Paula Boddington (Future of Life Institute,

Many computer scientists agree with the need to address AI Ethics now, not later, including the well-known computer scientist Ben Shneiderman, founder of the U. of Maryland Human-Computer Interaction Lab. In a May 2017 keynote speech at the annual Turing Awards, he called for the establishment of a National Algorithm Safety Board. Thomas Macaulay, Pioneering computer scientist calls for National Algorithm Safety Board (Tech World, 5/31/17). You can watch the complete speech Shneiderman made here.

Shneiderman said the Safety Board would apply to all algorithms, not just AI, and provide three forms of independent oversight: planning, continuous monitoring, and retrospective analysis. He contends this independent oversight is needed now “to mitigate the dangers of biased, faulty or malicious algorithms.” Shneiderman points to currently existing AI-guided systems in healthcare, air traffic control, and nuclear control rooms where “algorithms can be biased, harmful, and even deadly.” Shneiderman also explained that “The goal is to clarify responsibility, so as to accelerate quality. That’s the key thing.”

Whether superintelligence ever comes or not, there is a current need for regulation. As Macaulay points out in his Tech World article:

Public trust in algorithms has been damaged in recent months following a spate of scandals around them propagating biases, manipulating public opinion, and increasing inequalities. They’ve been used to determine who gets job interviews or loans, in courtrooms to falsely flag black defendants as future criminals in court sentencing and to misinform the public in political campaigns.

Shneiderman’s call for a National Algorithm Safety Board is consistent with, and maybe even more aggressive than, Elon Musk’s controversial statements at the 2017 National Governors Association Conference. Musk warned state governors of the dangers of Artificial Intelligence and called for preliminary work on regulations now. Here is the full transcript of Elon Musk’s discussion of AI at the Governors Conference.

We at AI-Ethics are not taking sides on the superintelligence debate, but we do favor consideration of regulations now. We take seriously the warnings about unregulated AI. Far future or not, AI is already having a significant impact on employment, policing, politics, news, elections, marketing, consumer behavior, medicine, law, transportation, the stock market and the military. It already raises serious ethical issues and legal issues, including liability issues. These legal issues are here and now, even if the super-intelligence apocalypse is not. The time to begin work on global regulations is now.

It seems obvious to AI-Ethics.com that certain basic values should be built into the DNA code of AI, 3D-printed into the silicon. What should that code say? Do? Prevent? Allow? The time to start addressing these questions is now, not tomorrow. Contact us with your thoughts and proposals or leave a Comment below. We will add your name to a private mailing list. We will not voluntarily release your information. We will listen to all input. We need your help to figure this out, to create basic principles and eventually specific regulations. We also need help to put on public events to foster dialogue between the conflicting camps and to inspire and educate everyone on the importance of artificial intelligence.

We do not know the answers, but we do know a process to get us there: open, in-person dialogues that take place at multidisciplinary conferences. Our goal is to be inspirational and meritocratic. We would also like to hear your ideas and proposals.

The events we put on will be open to and include everyone: technologists, AI specialists, scientists, businessmen and women, economists, ethics specialists, philosophers, professors, students, lawyers, regulators, patent clerks, judges, hackers and far-out techies of all types, plus all other concerned citizens from a wide variety of fields. In short, we will be open to everyone from all countries. Our goal for the AI-Ethics team is to be as interdisciplinary, diverse, and inclusive as possible. Yes, we will allow AI entities someday, but only after they pass a bona fide Turing test.

Our vision for AI-Ethics includes multiple conferences to inspire, attract, and start dialogues and spin-off working groups. The working groups will write papers for consideration by the larger body and then the general public, including government regulators. We propose to start by formulating the basic Principles of AI Ethics. The papers created would include specific proposals of principles. Later publications would include model proposed best practices, standards, rules and regulations. The reasoning and research behind each principle or rule would be explained in the papers. Comments would be solicited and considered from everyone, including open public comments.

The location for the initial in-person event would be near our center of operations, not too far from the Kennedy Space Port in Cape Canaveral, Florida. We do not have a time set yet. Contact us if you have any suggestions or might want to volunteer your time or funds to help make this happen. This is a non-profit group and event.

The focus of the initial conference will likely be on AI Ethics Principles. It would spin off various working groups to create consensus writings, including any dissents. We invite everyone to join us in this initial kick-off event in Florida. Again, there is no date yet, but we will let you know. Contact us to join our team, or at least to get on our mailing list. We will not voluntarily release your information.

[…] We would certainly like to hear more. As Oren said in the editorial, he introduces these three “as a starting point for discussion. … it is clear that A.I. is coming. Society needs to get ready.” That is exactly what we are saying too. AI Ethics Work Should Begin Now. […]

[…] the conclusion to the article, Barfield and Williams opine, as we do, that immediate actions are required now to prepare for likely future developments in AI, including […]

[…] action, if not voluntarily by social media providers, such as Facebook and Twitter, then by law. AI Ethics Work Should Begin Now. Etzioni’s proposed second rule of AI ethics should be adopted immediately: An A.I. system must […]

[…] There is a current compelling need to have some general guidelines in place for this research. AI Ethics Work Should Begin Now. We still have a little time to develop guidelines for the advanced AI products and services […]
